Case Study: Stackup

Case Study • July 1, 2016

Introduction

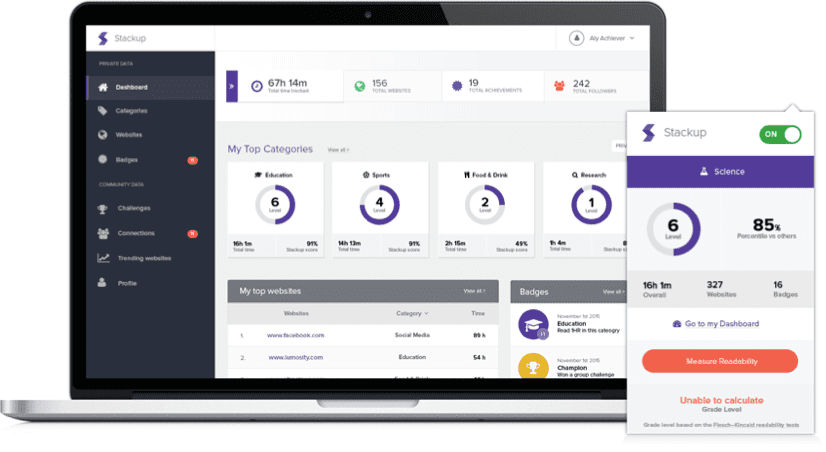

Stackup is a Google Chrome browser plugin and an online dashboard that tracks, measures, and reports online reading for K-12 students. Stackup provides school districts with software to measure reading comprehension progress while giving students the freedom to read what they want online. In 2015, Stackup received funding to execute a redesign project to increase adoption in schools and increase the engagement of its existing users.

My role on the project was Lead Product Designer. I collaborated with students, teachers, school administrators, engineers, product managers, executives, and other key stakeholders to design a new user experience and user interface. I delivered many key UX/UI artifacts, including user testing results, personas, user task flows, wireframes, and UI specifications. I also developed a custom design system to ensure that a consistent look and feel, matching the brand and the ideal experience of the end users, was applied throughout the systems.

Problem

Students spend a lot of their time outside of the classroom reading online. Traditionally they haven’t received credit for it in the classroom. Further, teachers haven’t had much insight into what their students are reading about outside of the classroom.

Benefit Hypothesis

By creating a benefit hypothesis statement, we can predict the potential benefits that Stackup will provide to its users. This exercise helps to define the goals and objectives of our product and to guide the design and development process.

How Might We Statements

“How Might We” (HMW) statements are a tool used in design thinking to frame a problem or challenge in a way that encourages creative thinking and brainstorming of potential solutions. They typically begin with the phrase “How might we…” followed by a statement of the problem or challenge. The goal of HMW statements is to reframe the problem in a way that is open-ended and non-prescriptive, encouraging a wide range of potential solutions to be considered.

Solution

Following a user-centered and Lean UX/UI design thinking process, I broke the solution down into several steps. First, I discovered information about the business and users. Then, I conducted brainstorming exercises to design and test a prototype. Finally, I worked with engineers to implement a solution. After many months of collaborating as a team, we released the re-designed Stackup browser plugin and web dashboard to the general public.

Project Kickoff

Project Kickoff Workshop

I scheduled a project kickoff meeting to officially launch the project and to ensure that all stakeholders were aligned on the project goals, objectives, timelines, and tasks. The meeting included representatives from the business, the engineering team, and other key stakeholders who would be involved in the project. Its purpose was to introduce the project team members to one another, review and confirm the project scope and objectives, discuss the project plan and schedule, assign roles and responsibilities, and establish clear lines of communication and reporting. It was also an opportunity to set expectations and get the project off to a good start.

UX/UI Project Plan

Using the information I learned from the project kickoff meeting, I drafted a project plan that included which UX/UI tasks would need to be done for the first release. For this project, I organized the task types into nine categories. Each category was timeboxed to one sprint.

| UX/UI Project Plan | Dates |
|---|---|
| Business Discovery | 09/01/2015 – 09/14/2015 |
| User Discovery | 09/15/2015 – 09/30/2015 |
| Content Requirements Discovery | 10/01/2015 – 10/14/2015 |
| Information Design | 10/15/2015 – 10/30/2015 |
| Interaction Design | 11/01/2015 – 11/14/2015 |
| Visual Design | 11/15/2015 – 11/30/2015 |
| Dev Hand-Off | 12/01/2015 – 12/14/2015 |
| Dev Support | 12/15/2015 – 1/14/2016 |
| Validation Research | 1/15/2016 – 1/30/2016 |
| Total | Target MVP Release Date 2/1/2016 |

Project Working Agreements

Lean/Agile Approach

To conclude our project kickoff meeting, we discussed our approach to executing the work. We agreed to track tasks in a Kanban-style backlog and work in two-week sprints. On a daily basis, we would meet as a team to discuss our work in progress and any obstacles we needed help with. We agreed to release an initial Minimum Viable Product (MVP) and then continue to deploy enhancements on a routine cadence.

Tools & Technology

From a tactical perspective, we also discussed the tools and technologies that we would use to complete the work. In the requirements exercise, I learned that the engineers would be using .NET and AngularJS. We also discussed using Bootstrap for the front end. To complete the high-fidelity interface designs, I chose to use Photoshop, as I already had a license. Finally, I decided to try UXPin for the wireframes and prototyping.

Design Approach

From a design implementation perspective, we decided to follow The Elements of User Experience framework by Jesse James Garrett. This framework breaks down each facet of the design process from concept to completion. It helps us build an experience that’s focused on outcomes, quality, and ease of use. It also helps us stay Lean by eliminating major re-work.

UX/UI Test Plan

To validate the effectiveness of the re-design, we chose to track metrics using Google’s HEART framework. The HEART framework breaks KPIs down into five categories: Happiness, Engagement, Adoption, Retention, and Task Success. Because Adoption and Engagement were top priorities for the business, the framework aligned well with our desired outcomes.

We decided to focus on tracking Adoption and Engagement first, using quantitative metrics from the Google Analytics Suite. We would also collect qualitative data through SurveyMonkey, online forms, and direct emails to customer support to evaluate users’ Happiness. In addition to surveys, forms, and email, we would also gauge users’ Happiness levels by way of empathy testing.

Empathize

The goal of the Empathize phase is to understand users’ needs and build empathy for what is important to them. I chose to conduct a heuristic evaluation of the existing platform first, to gain context around the system itself. Then, I conducted empathy interviews with real users from each of the groups. During the interviews, I learned about users’ pain points, their preferences, and their thoughts on how we could improve the product.

Heuristic Evaluation

Following a Nielsen-based heuristics template, I evaluated the existing browser plugin and web application. I scored each heuristic based on a set of corresponding questions and added notes and screenshots to each category. I repeated the evaluation four times, once for each of the four existing user types: Student, Teacher, District Administrator, and IT Administrator.

At the conclusion of my analysis, I had discovered many common usability antipatterns. I then submitted a single-page summary (along with my in-depth analysis) and recommendations to the client. Upon review, we prioritized several improvements to the interface, content, and design.

| Heuristic (Usability Analysis Report) | Description | Score | Percent |
|---|---|---|---|
| Visibility of system status | The system should always keep users informed about what is going on, through appropriate feedback within a reasonable time. | 20/28 | 71% |
| Match between system and the real world | The system should speak the users’ language, with words, phrases and concepts familiar to the user, rather than system-oriented terms. Follow real-world conventions, making information appear in a natural and logical order. | 11/16 | 69% |
| User control and freedom | Users often choose system functions by mistake and will need a clearly marked “emergency exit” to leave the unwanted state without having to go through an extended dialogue. Support undo and redo. | 18/24 | 75% |
| Consistency and standards | Users should not have to wonder whether different words, situations, or actions mean the same thing. Follow platform conventions. | 8/16 | 50% |
| Error prevention | Even better than good error messages is a careful design which prevents a problem from occurring in the first place. Either eliminate error-prone conditions or check for them and present users with a confirmation option before they commit to the action. | 14/20 | 70% |
| Recognition rather than recall | Minimize the user’s memory load by making objects, actions, and options visible. The user should not have to remember information from one part of the dialogue to another. Instructions for use of the system should be visible or easily retrievable whenever appropriate. | 23/40 | 58% |
| Flexibility and efficiency of use | Accelerators, unseen by the novice user, may often speed up the interaction for the expert user such that the system can cater to both inexperienced and experienced users. Allow users to tailor frequent actions. | 16/24 | 67% |
| Aesthetic and minimalist design | Dialogues should not contain information which is irrelevant or rarely needed. Every extra unit of information in a dialogue competes with the relevant units of information and diminishes their relative visibility. | 14/24 | 58% |
| Error recovery | Help users recognize, diagnose, and recover from errors. Error messages should be expressed in plain language (no codes), precisely indicate the problem, and constructively suggest a solution. | 18/24 | 75% |
| Help and documentation | Even though it is better if the system can be used without documentation, it may be necessary to provide help and documentation. Any such information should be easy to search, focused on the user’s task, list concrete steps to be carried out, and not be too large. | 4/8 | 50% |
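
The percentages in the report follow directly from the raw scores, and sorting them is one simple way to see which heuristics need the most attention. The sketch below is only an illustration: the scores are copied from the report, but the function names (`percent`, `rank_by_need`), the round-half-up convention, and the idea of ranking by lowest percentage are assumptions, not a description of how the original analysis was tallied or prioritized.

```python
# Scores copied from the Usability Analysis Report: (points earned, points possible).
HEURISTIC_SCORES = {
    "Visibility of system status": (20, 28),
    "Match between system and the real world": (11, 16),
    "User control and freedom": (18, 24),
    "Consistency and standards": (8, 16),
    "Error prevention": (14, 20),
    "Recognition rather than recall": (23, 40),
    "Flexibility and efficiency of use": (16, 24),
    "Aesthetic and minimalist design": (14, 24),
    "Error recovery": (18, 24),
    "Help and documentation": (4, 8),
}


def percent(earned: int, possible: int) -> int:
    """Whole-number percentage, rounding halves up (assumed convention).

    Integer arithmetic avoids floating-point surprises on exact halves
    such as 23/40 = 57.5%.
    """
    return (200 * earned + possible) // (2 * possible)


def rank_by_need(scores: dict[str, tuple[int, int]]) -> list[tuple[str, int]]:
    """Return (heuristic, percent) pairs, lowest-scoring first.

    The sort is stable, so ties keep the order used in the report.
    """
    rows = [(name, percent(earned, possible))
            for name, (earned, possible) in scores.items()]
    return sorted(rows, key=lambda row: row[1])


if __name__ == "__main__":
    for name, pct in rank_by_need(HEURISTIC_SCORES):
        print(f"{pct:>3}%  {name}")
```

Run as a script, this lists the heuristics from weakest to strongest, which puts "Consistency and standards" and "Help and documentation" (both 50%) at the top of the list.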

Empathy Interviews

For the initial empathy interviews, I scheduled phone calls with users from each of the four user groups. Note: At this stage of the project, student feedback was collected by their teachers. My questions focused on users’ ability to complete tasks within the system, their likes and dislikes, and their thoughts on how we could improve the product. After conducting the interviews and synthesizing the results (via an affinity diagram), I was able to prioritize areas for improvement and innovation.

Define

The “Define” phase sets the foundation for the rest of the process, by providing a clear understanding of the problem, the user, and what is expected to be achieved. It helps to ensure that the team stays focused on the user’s needs and does not get sidetracked by personal biases or assumptions.

Top Insights

Building off of what I learned through the empathy interviews, I created a list of prioritized areas for improvement and innovation and reviewed it with the client.

Personas

Next, I used the insights from the empathy interviews to establish an initial persona for each of the user types. The personas included basic information about the user and a list of their goals in using the product. I used this information to gain perspective on the needs of each user type, which would later help influence and validate my design decisions.

By this point, I had collaborated with the business, engineers, and end users to build a solid understanding of the product and the desired outcomes. I could now use this information to start visualizing ideas for a solution.

Ideate

The purpose of the ideation phase is to use the information gathered from the empathy tests to invent solutions. First, we quickly sketched several ideas on paper. Then, we shared our ideas with each other. Finally, we voted on the top ideas and started creating low-fidelity flow diagrams that would support them.

Crazy Eights

I timeboxed the crazy eights exercise to a one-hour period. We reviewed the personas to understand the needs and wants of our users. Then, we sketched up to eight low-fidelity thumbnails to visualize potential solutions. Next, we pitched our ideas to the group (one at a time) and asked questions about their value to our users and the effort to implement them. Finally, we voted on the top solutions to ideate on further as a group.

User Flows

After the top solutions were identified from the crazy eights exercise, I led the group through a user flow charting exercise. During this exercise, I sketched thumbnails of key screens on a whiteboard. We discussed how users could complete their goals and tasks in the fewest steps necessary. Finally, we established what we believed would be the most important user flows (aka red routes) to start with.

Prototype

By following the user flows from our ideation phase, I created a low-fidelity prototype for the key features and pages. The purpose of our prototype was to understand which of our ideas would work for our users and which would not. Once the prototype was complete, I reviewed it with internal stakeholders, completed edits, and prepared for user testing.

Test

Throughout the next two-week iteration, we invited teachers, school administrators, and EdTech SMEs to “walk the wall” and leave feedback via sticky notes. Afterward, I affinity mapped the sticky notes into three categories: Positive, Negative, and Additions. Finally, I used the feedback to make changes to the wireframes, re-tested with the corresponding user types, and prepared for implementation.

| Positive | Negative | New Ideas |
|---|---|---|

Implement

Over the next several iterations, I worked closely with the business and engineers to deliver high-fidelity visual and functional specifications. I used the Corporate Brand Guide and Bootstrap UI kit to create an HTML/CSS-based UI Library. Then, I paired with the engineers on implementing the interface. I was also responsible for quality assurance testing of the interface using the BrowserStack testing platform.

Story Mapping

To decide where to begin on the design, I created a story map that included each of the user types and a list of the enhancements and features that we believed would help increase adoption and engagement.

The enhancements and features that were the most requested during our user testing were prioritized the highest. Next, we prioritized enhancements that were recommended from the usability analysis. Finally, we prioritized enhancements that were needed for the technical architecture, security, and code debt.

Once all of the features/enhancements were added to the wall and prioritized, we calculated our capacity and established a release schedule for an MVP.

Corporate Brand Guide

Once the wireframe edits were complete, I met with the business to discuss the visual design. I collected the existing branded assets and added them to an online brand guide. The logos, colors, fonts, and icons would serve as the base styling for our UI Library.

UI Kit

My next step was to download and customize the Bootstrap UI kit for Adobe Photoshop. A UI kit (user interface kit) is a collection of design elements and pre-built user interface components used to create a consistent and visually appealing user interface. Ours included elements such as buttons, forms, icons, and layout templates, as well as guidelines for typography and color schemes. It served as a starting point for creating new pages and components, and as a reference for ensuring consistency across different parts of the app. I edited the UI kit to reflect the corporate brand guide and extended it to include our custom components.

High-fidelity Designs

Once the UI kit and component library were branded, I started to convert my wireframes into high-fidelity design specifications. Creating high-fidelity designs enabled me to test and evaluate the design in a realistic way. My designs closely mimicked the final product and included all the details and functionality of the final design. The designs also enabled me to evaluate and test the overall design, user experience, and usability of the product, which helped identify issues and areas for improvement before the final product was developed.

Developer Handoff

The purpose of the developer handoff process is to ensure that the engineers have all the information and assets they need to build the product according to the design and that the design intent is accurately translated into code. This process helps to minimize misunderstandings and delays and ensures that the final product meets the design requirements and specifications.

Because the engineers took part in all of the design thinking exercises, they were already aware of the solution they would be responsible for implementing. To make the transition from design to development a bit smoother, I created a responsive HTML5, CSS3, and JavaScript UI Library (using Bootstrap) for all of the pages and components.


Over the next few iterations, I continued to pair with the engineers to implement design specifications and QA test the interface. We released small batches of changes in each iteration to a production-like staging environment. I tested the interface using the automated processes built into the BrowserStack testing suite. Once the acceptance criteria were met for the MVP, the engineers released our new solution to the general public.

Empathize

With the MVP now fully functional and most of the features migrated over from the old system, we started widespread user testing on the new platform. I collected feedback by usability testing in classrooms and through feedback forms in the application. In addition, we monitored adoption and engagement analytics (among many others) through the Google Analytics Suite.

Observation & Empathy Interviews

For this next round of empathy interviews, we decided to visit classrooms in person. First, I’ll say that visiting elementary school classrooms for the first time in over thirty years was a nostalgic experience. Second, I was a bit overwhelmed by all of the activities. After settling in, I set up an interview station in the back of the classroom.

“When we first started using it (the students) weren’t even asking to go to the restroom… I was like, ‘Wow. We’re not taking a break here.’ They seem to love it.”

Jacqueline G., Teacher @ Scholars Unlimited

“It’s really rewarding because then you know you’re learning more of this subject and you can do better in actual school.”

Mia B., Student @ Scholars Unlimited

For the first half of my time, I observed the teacher creating and assigning a reading challenge to the students. Then, I watched the students as they used their Chromebooks and Stackup to complete the assignment. This was a particularly remarkable experience for me. Some of the students broke out into small groups and socialized with each other while reading. Other students put on headphones and read alone. The students were permitted to read wherever and however they pleased.

During the second half of the visit, I invited students to meet with me at the interview station. I asked the students what they thought about using Stackup and whether they would want us to add anything new. The feedback was overwhelmingly positive and validated our hypothesis, and I was able to understand their perspectives in more detail. These insights would enable me to update our personas with new information.

Persona Validation

My next step was to update our personas with the insights I learned from my in-class interviews and observations. As the product evolves, so do the wants and needs of the users. We must stay focused on the customer throughout the product development process and ensure that everyone is working towards a common goal. I removed information from the personas that was no longer valid and added the updated information. We would continue to repeat this process post-launch as new ideas were introduced, pain points were discovered, and new features were added.

Post-Launch Improvements

I continued to work with the business for several more iterations. During that time, I set up and executed a continuous improvement plan. My plan outlined the exercises we would perform to learn new insights and evolve the product. The plan was focused on constant user testing and was to be carried out on a routine cadence following the Design Sprint framework.

| Continuous Improvement Plan (Design Sprints) | Duration |
|---|---|
| Empathy Interviews | 1 Day |
| Design Thinking Workshops | 1 Day |
| Prototype & Test | 1 Day |
| Developer Handoff | 1 Day |

Design Sprint 1: Tracking Readability

One of the most requested features from our empathy testing was the ability to track readability. This feature helps the technology learn what reading level the user is at to offer more appropriate suggestions. In addition, users can now track their reading level over time. This would end up being the last feature that I designed for Stackup that was implemented into production.

Design Sprint 2: Advanced Reporting

As the Stackup plugin started to aggregate a significant amount of data on individual students, we started to receive requests from school administrators asking how we could show the data collectively. For my next Design Sprint, I explored using the D3.js library to display data-driven interfaces and documents.


Design Sprint 3: Game Mechanic Improvements

During my classroom observation, I saw several groups of students competing with each other. That made me think that Classroom versus Classroom challenges might be another way to motivate students to read more. We hypothesized that we could increase user engagement by improving the game mechanics.

As part of this feature, I also introduced the concept of “Classroom Currency” as an additional method to reward students. For example, if a classroom wins a reading challenge, each individual student would receive a homework pass to skip one small assignment.

In addition to these new concepts, we also explored evolving our Badge feature to leverage the benefits of the Open Badge platform. This required inventing a custom algorithm that would combine categories into a trade. In other words, we invented several skill paths.


Conclusion

Working on Stackup was truly one of the most rewarding experiences of my career. I used my UX/UI skills end to end to re-design a simple yet innovative solution to a real-world problem. I was able to see the impact of my work first-hand, and I received a lot of praise from our users and the local media. In closing, I enjoyed working in EdTech, particularly with students and teachers. I also enjoyed helping a business grow from hundreds of users to tens of thousands.